A discussion thread I got pulled into on Mastodon had me suddenly nostalgic for something we once had on the Internet, and which was really nice while it lasted: the Extensible Messaging and Presence Protocol, or XMPP, or sometimes referred to by its original name, Jabber.

What kicked off the discussion was somebody asking about a more modern "decentralized chat platform" known as Matrix (not the movie). A lot of people online talk about how they like Matrix, but I one time had a go at self-hosting my own Matrix node (which I feel I should rant about briefly, below), and the discussion turned back towards something that we used to have which was (in my opinion) loads better, XMPP.

So I haven't posted a good rant on my blog in quite some time - I had chilled out a lot in my older years, but I just have to tell this story about Safari and my struggles in getting it to work with my chat room I had built recently.

My chat room is a fairly basic app - it's open source and written "from scratch" using WebSockets to handle the client/server communication and they pass basic JSON messages around. All fairly standard stuff and shouldn't be a big ask for any modern web browser that supports modern web standards. It works flawlessly in Google Chrome as well as all other Chromium derivatives (including Edge, Brave, etc.), and it works flawlessly on Firefox, and when you run either browser on your Windows, Linux or Mac OS computer. It even works great on all Androids, too - using Chromium or Firefox browser engines.

But then there's Safari. Safari "supports" WebSockets but it doesn't do so very well and I've been fighting this for weeks now trying to chip away at this problem. Both when you run Safari on your Mac OS Ventura desktop or on your iPhone or iPad, Safari is very easy to overwhelm and it will disconnect from the chat room at the slightest hiccup of an issue.

My rant here actually is about three different problems that have made my life difficult trying to get Safari to work:

I don't own a Macbook or an iOS device and so would have no way to even debug or look into this problem, but at least there is an option to run Mac OS inside of a virtual machine (using something like OSX-KVM) so at least I can look into the desktop Safari browser and see what its deal is.

First - here is how my chat basically works: you connect, send your username to log in, the chat tells everyone you joined, sends everyone the new Who's Online roster, and sends some "welcome messages" to the one who joined where I can send my rules or new feature announcements to you.

What I would see when a Safari user logged in was: they'd connect, log in, receive all those welcome messages and then they would immediately hangup the connection and log off. On their side, the web browser gives a very unhelpful error message regarding the WebSocket:

Protocol error.

That's it - it doesn't say what the error was. Even when I have a Safari browser in front of me, they give me no help at all to find out what's wrong!

Through trial and error, I found out:

...and that kind of thoroughly sucks. I can remove all the welcome messages so as to allow Safari to at least log on, but then just one user posting a paragraph of text will kick all the Safari users out of the chat room!

Chrome and Firefox don't experience this issue. A while ago I added picture sharing in chat - you send your picture over WebSocket and it echoes it as a data: URL to all the chatters, passing those bytes directly back out; Firefox and Chrome can cope with this perfectly, but that would for sure kick Safari users off for too long of a message!

So, when it comes to Mac OS users I can tell them: Chrome and Firefox work better. But this advice does not fly on iPads or iPhones because, per Apple's rules, web browsers on iOS are not allowed to bring their own browser engines - they all are just custom wrappers around Mobile Safari.

And you guessed it: Mobile Safari doesn't like my WebSockets either!

I am hoping that with EU pressure placed on Apple to where they will allow competing browser engines to join their platform, that at least some of my iOS users will have a way to use my chat room. But how it stands currently is: iPads and iPhones simply can't use my chat room at all, or if they can get on (maybe it's the "too long of message" issue with them as well), they will be fragile and easy to boot offline just by writing too long of a message.

Apple will not innovate on Safari and make it support modern web standards, and they've made sure that nobody else can innovate either. The Blink engine from Chromium and Gecko from Firefox both work great with WebSockets and if only they were allowed to exist in their true forms on Apple mobiles, I wouldn't be ranting about this right now, I could just say "don't use Safari."

And side rant: the reason Apple won't allow Chrome or Firefox to compete with them is because they are scared shitless about Progressive Web Apps, which could compete with their native app store. They won't innovate on Safari at all until their feet are held to the fire (thanks for that as well, EU!), their web browser sucks (as this WebSockets thing illustrates), they're holding everybody back - the new Internet Explorer 6.0!

Even if I owned an iPad, it wouldn't help - you can't get to the browser logs on an iPad browser to even see what kind of error message it's throwing. Though I imagine the error message would be just as helpful as desktop Safari, anyway: "Protocol error."

In order to get logs off an iOS web browser, you need to pair it with a Macbook computer -- the only kind of device that is allowed to run the iOS development kits and is the only way to debug anything that happens on your iPad.

With Mac OS, at least there is a way I can spin up a virtual machine and get my hands on Safari. There is no way to do this for iOS. There's no virtual machine where I can run the genuine Mobile Safari app and have a look at this issue myself. I wish Apple weren't so closed in their ecosystem - comparing it to Android for example, you can debug an Android app using any kind of computer: Windows, Linux, Mac OS, even another Android, even on-device on the same Android.

I am not an iOS developer, I don't care to be an iOS developer, and I don't own any Apple hardware, and it really sucks when I run into a web app issue that should have nothing to do with Apple specific software, and I simply can not even get in and look at the error messages.

I'd been chipping away at this for weeks, basically blindly trying things and throwing shit at the walls and hoping one of my Apple using friends tries my chat room once in a while to see if any of my efforts have worked (spoiler: they haven't worked).

I've also tried reaching out to developers on Mastodon and other social media: my chat room is open source, could somebody with Apple hardware help me debug and see if they can find out what I can do better to get Safari to like my chat room. Maybe I didn't reach the right ears because I've gotten only crickets in response. Meanwhile about a third of my users still can not get onto my chat room at all.

Where I've landed so far is: it seems Safari can connect, but that < 500 character limit issue seems horribly broken and I don't want Safari users getting kicked off left and right by surprise, it's a bad user experience.

If I wait it out long enough (and if the EU is successful), Apple may permit actually good web browser engines on their platform and then my chat will work perfectly on them. It may just take a couple of years though - first Apple would have to be successfully sued into submission, and then Google/Mozilla would have to port their browser engines over which could be its own long tail of development work.

Maybe as a consequence of that regulation, Apple will actually put some new work into Safari instead of just neglecting it and they'll fix their shit. Currently it seems like my WebSocket messages need to be smaller than a tweet on Twitter, and it honestly won't be worth all the effort it would take me to reimagine my whole chat room and come up with a clever protocol to break messages apart into tiny bite sized chunks when it's only one, non-standards compliant web browser, which drags its stick thru the mud as badly as Internet Explorer ever did, that has the issue here.

So are they the new Internet Explorer, or somehow even worse?

I have sometimes had people visit my blog because they were Googling around in general about Apple and they like to fight me in the comments because I dissed their favorite brand. If this is you, at least say something constructive in the comments: have you ever built a WebSockets app and do you have experience to share in how to get Safari to behave? If you're simply an end user fanboy and never installed Xcode in your life, save your comments, please.

Probably one of the most frustrating things to deal with as a software developer is time. Specifically time zones and daylight savings time and all that nonsense. Tom Scott has a video about it but I have a recent story of my own.

This week at work, we lost about two days of effort trying to make our web application timezone-aware. Apparently, time zones are so ridiculously complicated, that it's basically impossible to store a time with an arbitrary time zone in your database while remembering what the time zone is.

Don't believe me?

The web stack in this story involves JavaScript, Python and PostgreSQL, with PostgresSQL's problems being applicable to any backend stack.

Manually managing a music collection of MP3 files on disk is such a pain in the ass that I felt like blogging about it.

First, you have cloud music services like Google Play Music which can't detect duplicates properly.

In user interface and software design, the principle of least astonishment states that "if a necessary feature has a high astonishment factor, it may be necessary to redesign the feature." It means that your user interface should behave in a way that the user expects, based on their prior knowledge of how similar interfaces behave.

This is a rant about Mac OS X.

This is something I wanted to rant about for a while: event loops in programming.

This post is specifically talking about programming languages that don't have their own built-in async model, and instead left it up to the community to create their own event loop modules instead. And how most of those modules don't get along well with the others.

I jumped ship from GNOME 2 to XFCE when GNOME 3 was announced and have ranted about it endlessly, but then I decided to give GNOME 3.14 (Fedora 21) a try.

I still installed Fedora XFCE on all the PCs I care about, and decided my personal laptop was the perfect guinea pig for GNOME because I never do anything with that laptop and wouldn't mind re-formatting it again for XFCE if I turn out not to like Gnome.

After scouring the GNOME Shell extensions I installed a handful that made my desktop somewhat tolerable:

And then I found way too many little papercuts, some worse than others. My brief list:

Settings weren't always respected very well, and some apps would need to be "coerced" into actually looking at their settings. For example, I configured the GNOME Terminal to use a transparent background. It worked when I first set it up, but then it would rarely work after that. If I opened a new terminal, the background would be solid black. Adjusting the transparency setting now had no effect. Sometimes, opening and closing a tab would get GNOME Terminal to actually read its settings and turn transparent. Most of the time though, it didn't, and nothing I could do would get the transparency to come back on. It all depended on the alignment of the stars and when GNOME Terminal damn well feels like it.

Also, I use a left handed mouse, and GNOME Shell completely got confused after a reboot. The task bar and window buttons (maximize, close, etc.) and other Shell components would be right handed, while the actual apps I use would be left handed. So, clicking the scrollbar and links in Firefox would be left-handed (right mouse button is your "left click"), and when I wanted to close out of Firefox, I'd instead get a context menu popup when clicking the "X" button. Ugh!

I wanted to write this blog post from within GNOME but it just wasn't possible. With different parts of my GUI using right-handed buttons and other parts using left-handed ones, I had context menus popping up when I didn't want them and none popping up when I did. After a while I thought to go into the Mouse settings and switch it back; this didn't help, instead, the parts that used to be right-handed switched to left-handed, and vice versa. It was impossible to use. I just had to painstakingly get a screenshot off the laptop and to my desktop and deal with it over there instead.

These things just lead me to believe the GNOME developers only develop for their particular workflows and don't bother testing any features that other mere mortals might like to use. All the GNOME developers are probably right-handed, and they have no idea about the left-handed bugs. All of the GNOME developers don't use transparency in their terminals, evidenced by the fact that the transparency option disappeared from GNOME 3.0 and only just recently has made a comeback (in GNOME 3.12/Fedora 20).

XFCE is going back on this laptop.

So I took a few pics with my Nexus 5 this morning, and when viewing them in the Gallery app they appeared to have not saved correctly (there was a solid dark grey placeholder image for each one I took). If I opened the Camera app and swiped over to see them, there they were. But the Camera app also popped up a toast saying "Insert an SD card to save photos."

The Nexus 5 has no SD card.

And then I probably shouldn't have rebooted my phone (the Camera was being an idiot, so I thought rebooting would fix it), cuz when the phone came back up, the Camera folder was entirely deleted. Like, it didn't show up in the Gallery, and swiping in the Camera app did nothing. /mnt/sdcard/DCIM/Camera didn't exist anymore. Take a new picture, and the Camera folder was remade but only had the one new picture in it.

So, Life Pro Tip: Android can do this sometimes. If you get those kind of symptoms, hopefully try making a backup of the Camera folder (using like Astro or Root Browser), before rebooting the phone. You'll probably still lose the newly taken/corrupted pictures but at least Android shouldn't delete all your other camera pictures.

I think the Camera app was still able to see the pictures even though they weren't saved because they were kept in some sort of temporary in-memory cache of the Camera app. Probably the best way to save them would've been to take screenshots of them from within the Camera app.

All the other folders that had pictures in them were left alone, i.e. pictures saved through Snapchat and other photo albums.

I used a recovery program to restore all the deleted photos/videos on my phone. Site I used. Some of the pictures were corrupted but I was able to get all the ones I cared about back. :) Most of the deleted files were Snapchats that I already had non-deleted copies of.

Also, the selfies that I took this morning that "caused" this whole entire mess were recovered as well, so that's double-good-news. :P One of the pics that literally broke my camera:

But, the entire rest of the Internet totally sucks. I'm using Google's DNS (because Time Warner Cable's have been absolutely useless, like making me wait several minutes just to resolve a domain name), so that's not part of the problem either. It seems also that on the general Internet, the entire network drops in pulses every 30 seconds for 5 seconds at a time. So if I'm on Reddit, and click to expand a dozen imgur links with my RES extension... all of the links take a while to load, and then all of a sudden they all load at the exact same time.

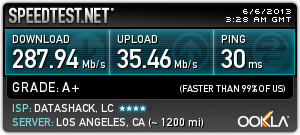

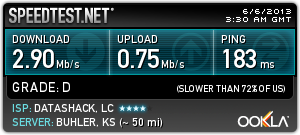

I was reasonably sure that Time Warner Cable was using traffic shaping to "put on a good show" for Speedtest.net, and throttling my network speeds for all the other sites. So that if I called and complained about my slow Internet, they'd say "go to Speedtest.net and see for yourself that you're getting your 15-20 megs down that you're paying for" when instead, they're cheating on speed tests and slowing me down on every other site in the world.

I just had to find a way to prove it. The idea I came up with was to proxy my speed test through a fast web server. My web server, though, wouldn't cut it. My server got 900 megs down, but only like 12 megs up... which I thought was absurd, because a web server's entire purpose in life is to upload web content to the end users. A server's upload speed is the bottleneck that controls your maximum download speed when downloading data off of it.

I migrated to a new web server that gets much better results on speed tests, and its upload speed is higher than my quoted download from my ISP. So, I used it to try and test whether my ISP is cheating on me.

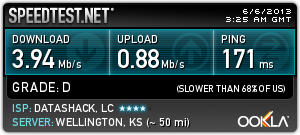

The tools I used: speedtest-cli, a command line Speedtest.net client (so I don't have to worry about trying to proxy Adobe Flash traffic, which is notoriously difficult to do), and proxychains, a Unix tool that lets you force any other program through a proxy regardless if the program wants it or not. This way I could proxy speedtest-cli to run through my web server instead of directly hitting up Speedtest.net.

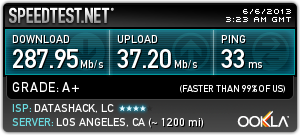

My web server is hosted in Kansas City, MO, which is a bit of a drive away from Los Angeles, so to remove any geographical bias from the equation, when I ran my speed tests from my web server, I selected Speedtest servers here in Los Angeles. This showed me that my web server was able to download and upload reasonably fast from Kansas City to LA, and that the opposite should be true as well.

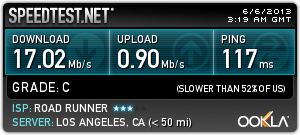

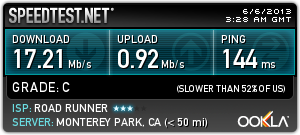

I'll let the results speak for themselves (note: I'm hosting these images here locally because I'm not sure speedtest.net hosts the results on their server forever. Clicking the images though will take you to the link that speedtest.net gave me). I did two rounds of tests for redundancy.

| PC Speedtest | Server to LA | Proxychains |

|---|---|---|

|

|

|

|

|

|

wget initially spiked to about 500 some entire kilobytes per second (about 4,000 kilobits or 3.9 megabits) before quickly dropping to between 100 and 200 KB/s (or 800-1600 kilobits/s) for the remainder of the file transfer. Overall it took 22 minutes, 46 seconds to download. Given my quoted download speed of ~17 Mbps, and my server's upload speed of 35 Mbps upload to Los Angeles, this is inexcusable.For those who like the dirty details, I copy/pasted my terminal outputs from speedtest-cli and you can see them here: speedtest-cli.txt. So you understand what you're looking at:

aerelon is my local PC.debian is my web server.proxychains ./speedtest-cli --share is me running the proxy through my web server.[kirsle@aerelon ~]$ cat .proxychains/proxychains.conf | grep -v '^#' strict_chain quiet_mode proxy_dns tcp_read_time_out 15000 tcp_connect_time_out 8000 [ProxyList] socks5 127.0.0.1 8080Where

127.0.0.1:8080 is a SOCKS5 proxy over SSH to the web server.

But, I just bought my first computer that actually had Windows 8 preinstalled on it: a Samsung Series 5 Ultrabook. It has a touchscreen and some nice features, and I wanted to dual-boot Windows 8 and Fedora Linux on it (giving Windows the smaller half of the hard drive, of course). Microsoft apparently went to great lengths to make this darn near impossible.

I came to find out, when you have Windows 8 pre-installed on your computer, it's probably the Windows 8 "Core" Edition. The only Win8 install DVD I had laying around was the Professional edition that I bought for $40. It was logical to me that I should be able to hopefully reinstall Windows 8 on the ultrabook from this DVD, maybe even using the same OEM product key that the laptop came with and not having to worry about any activation issues.

Apparently, Windows 8 PCs have their product keys "baked in" to the BIOS ROM. If you boot a Windows 8 installer of any edition, the installer looks for this product key in the ROM, and if the key is for a different edition than what the installer is intended for, it gives you some ugly error message about, "The product key you entered doesn't correspond to any of the install images. Enter a different product key." -- except it doesn't let you enter a product key anywhere, and just restarts the setup process from the beginning.

There also apparently is no Windows 8 Core ISO floating around the Internet -- not one that I would trust downloading, anyway. With the baked-in product key, the only Win8 installer that would work would be a Core edition installer, which doesn't exist. You can't install Windows 8 Pro on your computer, because the installer simply won't allow it.

Long story short, Microsoft has basically forced me to forego dual-booting completely and just install Linux on the entire hard disk. The built-in Windows 8 OS came bundled with a bunch of Samsung's crapware, and there's no way to "start fresh" with Windows 8 -- your only option of "reinstalling" is to use the Control Panel feature, which restores "to factory settings", which means your Samsung crapware is still gonna be there after the reinstall is done. And you can't install from scratch from a DVD for the aforementioned reasons.

You can even completely remove Windows 8 from your computer, maybe install Windows 7 or Linux across the whole drive, and you still won't be allowed to put Windows 8 back on there from an install DVD or USB again.

There is a workaround, though: if you make a bootable USB for Windows 8, you can add a text file to it where you specify the product key to use. For me to do this, however, I'd need to buy an additional Windows 8 Pro license, and that's not worth it to me. So, good riddance Windows 8, I don't care to have you on my ultrabook anymore, anyway.

0.0021s.