I blog about anything I find interesting, and since I have a lot of varied interests, my blog entries are kind of all over the place. You can browse my tags to sort them by topic and see which ones I frequently write about, or browse the archive for a complete history of my posts, dating back to 2008!

Besides my blog, I have pages for my creative projects, which are linked to on the navigation bar.

I write a lot about Linux, Android and Minecraft, and I like to rant about stuff: generally anything that makes me curious. Also check out my Bookmarks for all sorts of cool websites about various topics I'm interested in.

For the geeks: this website respects your privacy and doesn't run any third party ads or analytics. This site speaks HTTP and doesn't require any JavaScript to work.

How to get into software development

Somebody had asked me about this recently, and I thought I must have already written a blog post about it before, but apparently I had not!

If you (or someone you know) have been curious about how to break into software engineering as a career (or as a career change), then this post is for you. A proper college degree is NOT even necessary for most software jobs!

The basic steps I will recommend are as follows:

Now I'll go into each of these in more detail.

For my career, I have mainly worked in the "web development" space so my recommendations here are coming from that world. See the footnotes for some advice for other software development paths (e.g. videogame development).

If you don't know any programming languages yet, you should choose a popular language to be your first one, and you should prefer one that a large number of companies use, because that's where the jobs are.

My current recommendations would be:

If you aren't familiar with what front-end vs. back-end is, I can describe them briefly:

It's also possible to be a "full stack developer" where you are building both back-end and front-end code, but that usually requires you to learn multiple programming languages and technology stacks. For somebody just getting started, your time would be better spent picking the side you resonate with the most and focusing your attention on learning its programming languages and frameworks.

Note: after you've learned one language, it is much easier to learn your second one. There are so many things they all have in common: functions, variables, and control flow are very similar across them all.

There are tons of free tutorials online that teach you how to build web apps in any of these programming languages. Just google for something like, "Build a web blog in Python" or "Build a Twitter clone in JavaScript".

Those two projects (a web blog and a Twitter clone) are very common examples that you'll find a tutorial for in any programming language. "To Do" apps are another example. These kinds of projects will have you learning many real world skills, such as databases and authentication cookies, that you would use at a real job.

Most such tutorials will not only help you learn the programming language itself, but also use a popular "web framework library" built for that language. Almost nobody is programming a raw HTTP server in Python; instead, they use a web framework that provides the basic structure and shape of their app, and then they fill in their custom business logic on top.
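To make the idea concrete, here is a toy sketch in Python of the division of labor between a framework and your own code. It is not Flask or any real library: the `TinyApp` class, its `route` decorator and its `handle` method are made-up names for illustration only. The class plays the role of the framework (it remembers which function handles which URL path and dispatches to it), and the small functions at the bottom are the "business logic" you would write yourself.

```python
class TinyApp:
    """A toy stand-in for a web framework (hypothetical, not a real library)."""

    def __init__(self):
        # Maps a URL path like "/about" to the function that handles it.
        self.routes = {}

    def route(self, path):
        """Decorator that registers a handler function for a URL path."""
        def register(handler):
            self.routes[path] = handler
            return handler
        return register

    def handle(self, path):
        """Look up the handler for a path and return (status, body)."""
        handler = self.routes.get(path)
        if handler is None:
            return 404, "Not Found"
        return 200, handler()


app = TinyApp()


# Everything below is the "business logic" that you would write yourself.
@app.route("/")
def index():
    return "Welcome to my blog!"


@app.route("/about")
def about():
    return "I am learning to code."


if __name__ == "__main__":
    for path in ["/", "/about", "/missing"]:
        print(path, app.handle(path))
```

A real framework does far more than this (parsing requests, cookies, templates, database helpers), but the shape is similar: you register handlers and the framework decides when to call them.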

For some specific recommendations of very relevant frameworks that currently see widespread use in business:

In my career at Python shops, we've always used Flask to build our apps, and Vue.js has been the most commonly used front-end framework at the companies I've worked at. React.js is very popular as well.

If you aren't disciplined enough to teach yourself how to code, and you don't want to dedicate two to four years to going to university, you can look for some "coding bootcamps" in your area too. Those bootcamps will typically go for several weeks and teach you everything you need to know about web development.

I've interviewed and worked with people whose sole educational experience was via these bootcamps and they did just great on the job.

A very good way to learn a programming language is to actually have a project that you want to build.

If you just go to a programming language's website and read through the tutorials there, they can be rather boring. They have you writing trivial little programs to learn how if/else expressions work, and silly things like that. After you have picked up the bare basics, I recommend jumping in and starting on a project of your own.

That's where the aforementioned "build a blog" style of tutorial will come in. Those will give you a good structure to follow, using real world frameworks and libraries that professional developers use at work.

It doesn't really matter much what you choose for your project. If you don't already have a website, programming a web blog is a good first option. Then, you'll have a website that you can post things on and document your journey of learning how to be a better programmer!

You could also build something like a videogame, or a chatbot, or a little web page that connects to various social media APIs and provides status updates from all your friends in one place. Something to scratch your own itch. It doesn't really matter a whole lot what your project is!

The important part, though, is that you open source your project and get a GitHub account and put your source code on it for others to see. (If you don't like GitHub, you can try GitLab instead).

The point is: having source code online will already make you stand out from the crowd when you begin applying at places to work. I have interviewed hundreds of developers over the course of my career, and most of them don't have a GitHub link or anything on their resume. Those who do are already getting off to a good start with me.

After you have gotten a project or two under your belt and have learned the basics of how to build a website, you can start applying to companies for a web developer position.

There are always a ton of little startup companies or other small businesses who need anybody that can code. They don't even care if you have a college degree or not, and that is where having some source code up on GitHub can be helpful, so you can point to that as evidence that you know how to code.

The larger corporations will often say they want their candidates to have a Bachelor's degree in Computer Science or similar, but, once you've gotten your foot in the door at a small startup company and keep at it for about 5 or 10 years, you'll start getting recruiter e-mails from even Google, Meta and Amazon with them wanting you to apply to them. A few years of "real world" experience is typically a lot more important than some piece of paper from a university.

The above was basically how I did it, and I'll tell you how.

I have been teaching myself how to program since I was a young teenager. I learned how to write web pages in HTML when I was 12 (using the very same W3 Schools HTML tutorial that I linked above!), JavaScript soon after, and when I was 14 I taught myself Perl so that I could program chatbots for AOL Instant Messenger.

Throughout my teen years, I had published many Perl modules online as open source software. Some were related to my chatbot projects, some of them were Perl/Tk GUI toolkit modules, some were very niche things such as Data::ChipsChallenge which could manipulate data files for the old Windows 3.1 game "Chip's Challenge".

I got my first proper job as a software developer purely because of my open source projects. I just pointed at my CPAN page as a place for recruiters to see my work.

For my second software development job (because I was still fairly green with only a few years' experience), the job interview consisted 100% of me going through the source code of RiveScript.pm, my chatbot project I had written in Perl, and explaining how I designed my code and what I would do better if I rewrote it all again.

And: nobody ever asked me about a college degree for any of these jobs. When I got my first job, I was only one year into a college program (at ITT Technical Institute, rest in peace). I only even went to college because motivational speakers at my high school made me think it was absolutely required. I finished out my Associate's degree (two years total) and called it quits, just so that I would at least have something to show for my student loan debt. But nobody in my entire career has ever asked or wanted to verify my college degree.

Way back when I was interviewing to get my first couple of software dev jobs, I was naturally pretty anxious and worried that I wouldn't do well enough to pass those interviews. But after I got in, and it became my turn to conduct the interviews and see how it looks from the other side of the table, I wondered what I was ever so worried about.

Throughout my career, I have interviewed hundreds of software developers. Many of them were fresh college graduates who went to school for computer science, but had no "real world" coding experience. Others had come from other companies and had years or decades of experience.

A thing that surprised me is that so many people who come in for an interview, just don't know how to program. I once saw this article, "Why Can't Programmers... Program?", which may be the origin story of the "Fizz Buzz" meme of recent decades.

But, I've found it to be fairly accurate. At a software dev interview, we'd often ask the candidate to write out a quick "puzzle program" to test their logic and coding ability, and for most candidates, it was like pulling teeth. They would usually get through it, with some help, over the span of 20 minutes or so.
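For reference, the classic "Fizz Buzz" puzzle asks you to count upward from 1, saying "Fizz" for multiples of 3, "Buzz" for multiples of 5, and "FizzBuzz" for multiples of both. A minimal sketch in Python might look like the following; the function name `fizzbuzz` is an arbitrary choice, and this is only one of many acceptable ways to write it.

```python
def fizzbuzz(n):
    """Return the Fizz Buzz word for n, or n itself as a string."""
    if n % 15 == 0:      # divisible by both 3 and 5
        return "FizzBuzz"
    if n % 3 == 0:
        return "Fizz"
    if n % 5 == 0:
        return "Buzz"
    return str(n)


if __name__ == "__main__":
    for i in range(1, 16):
        print(fizzbuzz(i))
```

The puzzle is deliberately simple: it only needs a loop, a few `if` checks and the remainder operator, which is why interviewers use it as a quick filter.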

Usually, it was the fresh college graduates who struggled the most. You'd think, being fresh out of school, their knowledge of programming would be sharp. But in class they learned a lot of esoteric problems and rote memorization of algorithms, so when faced with a new problem they hadn't specifically seen and practiced before, they froze. Programmers who already had a whole career of real world experience at various companies fared a lot better on these kinds of questions.

The point I'm wanting to make is: if you actually know how to program, even if you're nervous at the interview, it is a night and day difference and you will already stand out greatly compared to most of the candidates who walk through that door. Having your own "real world" projects you programmed for fun, and put on GitHub, puts you at a great advantage even above the fresh college graduates who spent the last 4 years learning about computers.

When I see a GitHub link on someone's resume, it's a huge green flag for me. In the time leading up to the interview, I'll check out their source code and prepare some questions about it. If you can walk me through your projects and explain how they work, you're basically going to pass the interview. It may not seem like much, but you would be a breath of fresh air for the interviewer after they just watched the 10 previous candidates struggle their way through a "Fizz Buzz" algorithm.

This one is a valid question and the answer is just that "we don't know."

The above advice all worked out great for me and others I met during my career, but the software industry bubble may finally be about to pop. The industry is genuinely concerned that "junior developers" may start to go extinct, as companies race to replace them with A.I. and only retain their "senior developers" on salary. Eventually, those senior developers will retire or die off, and nobody will know how to code anymore because A.I. has made everyone dumb and companies won't be able to hire new senior developers to replace them.

However: I say learning how to program is a valuable skill to have no matter what you do for your career. Even if you don't get a job as a software developer, being able to write little scripts and programs to automate the tedious parts of your job will continue to pay dividends for your entire lifetime.
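As a small taste of what "automating the tedious parts" can look like, here is a sketch of a Python script that tidies up file names in a folder. The function name `tidy_filenames` and the renaming rule (lowercase, underscores instead of spaces) are invented for this example; the demo at the bottom runs against a throwaway temporary folder so no real files are touched.

```python
from pathlib import Path


def tidy_filenames(folder):
    """Rename files in folder to lowercase, with underscores for spaces.

    Returns a list of (old_name, new_name) pairs for the files it renamed.
    """
    renamed = []
    for path in sorted(Path(folder).iterdir()):
        if not path.is_file():
            continue
        new_name = path.name.lower().replace(" ", "_")
        target = path.with_name(new_name)
        # Skip files that are already tidy, and never overwrite an existing file.
        if new_name == path.name or target.exists():
            continue
        path.rename(target)
        renamed.append((path.name, new_name))
    return renamed


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "Quarterly Report.TXT").write_text("hello")
        (Path(tmp) / "notes.txt").write_text("hi")
        print(tidy_filenames(tmp))
```

Doing this by hand for a few files is fine; doing it for a few hundred is where a ten-minute script starts paying for itself.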

While the above advice was especially for "web development" jobs, much of it applies more broadly to any kind of software development.

If you want to get into videogame development for example, C Sharp (C#) is a useful language to learn because of its prominence in the popular game engine Unity. Those are also useful skills if you want to program Virtual Reality (VR) applications.

If you are interested in mobile apps, the answer is straightforward there: Swift for iOS and Kotlin for Android, and there are many mobile app tutorials out there to get you started.

If you want to get into "systems programming", such as working with microcontrollers and embedded systems, C is still king and will never be going away. Rust is a popular up-and-coming language with many new jobs opening up, as it can fulfill many of the same roles that C can, but in a more (memory) safe way. Many companies are rewriting their systems in Rust lately, and Rust has a massive library of modules available for doing anything from web development to videogames to desktop/mobile applications to embedded systems development.

And the rest of my advice above still applies: pick a language, check the job market if that's a concern, and then pick a project.

This may be a hot take, but in 2025 my favorite web browser is still (and has been for decades) Mozilla Firefox and I'll tell you why.

Disclaimer: I am not affiliated with Mozilla in any way, I have never worked for them or taken any money from them. I just like their web browser and have a lot of thoughts about the Web in general, as the below post will make abundantly clear. I only started thinking about writing this post after a friend recently asked me what my preferred browser is, and then asked why when I said "Firefox."

In 2025, there are only about three different types of web browsers:

In terms of market share, Google Chrome currently holds 66% of the entire market. When you add the other Chromium-based browsers, such as Microsoft Edge at 5.18% and smaller derivatives such as Brave, the Chromium Blink engine has 79% of the global browser engine market share.

Safari has a decent slice of its own, at 17.59%, thanks in large part to the way that iPhones and iPads mandate the use of the Safari engine to power all web browsers, plus the not insignificant market of MacBooks which have Safari as the default browser. Safari, however, does not run on Windows or Linux. So while Safari is a nice browser with a good chunk of the market, it is isolated to Apple devices, whereas Firefox can run everywhere.

But Firefox only has 2.51% of the market and its engine, Gecko, could be at risk of going extinct one day.
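To put those numbers side by side, here is a quick back-of-the-envelope calculation in Python. It uses only the percentages quoted above and assumes they are all measured against the same total, which may not be exactly true of the underlying statistics; the variable names are just labels for this sketch.

```python
# Market share figures quoted in the text above (percent of the browser market).
chrome = 66.0
edge = 5.18
chromium_total = 79.0   # all Chromium/Blink-based browsers combined
safari = 17.59
firefox = 2.51

# What is left over for Brave and the other smaller Chromium derivatives.
other_chromium = round(chromium_total - chrome - edge, 2)

# Share not accounted for by the three engine families.
unaccounted = round(100 - chromium_total - safari - firefox, 2)

# Roughly how many Chromium-based users there are for every Firefox user.
ratio = round(chromium_total / firefox, 1)

print(other_chromium, unaccounted, ratio)
```

Under that assumption, the smaller Chromium derivatives together make up a bit under 8% of the market, and there are roughly 31 Chromium-based users for every Firefox user.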

Why is the Chromium monopoly worrisome? Because it is largely governed by Google, which is an ad company and has somewhat of a conflict of interest when designing web standards. Google's decisions in Chromium will have a ripple effect across all downstream derivatives.

I also lived through the "browser wars" era, when Internet Explorer 6.0 clung to life for way too long with way too large a market share. It wasn't keeping up with the times, and it made life difficult for web developers, who couldn't use the shiny new features that Firefox and Chrome were bringing to the Web because we had to maintain compatibility with Internet Explorer 6. A Chromium monopoly would again lead to web developers getting complacent, designing their websites only for Chromium and not caring to test them in Firefox because they don't see the point.

I continue to use and support Firefox so that when my visit appears in a website's analytics, web developers know that Firefox users still exist and that they should continue to support it on their websites, lest we all just give up and let Chromium dominate the Web.

Ha.

The vast majority of Chromium developers are full-time Google employees. The Chromium project sees hundreds of commits daily with mainly Google engineers working on the codebase.

Yes, Chromium is open source, and theoretically, if Google makes an unpopular decision that benefits only its own interests, other downstream derivatives of Chromium could patch those changes out and undo them. For example, when Manifest V3 came out and crippled ad-block extensions, which strongly benefited only Google's interests, the other downstream Chromium browsers could simply revert those changes and maintain support for the old, working ad-block extensions.

For a time.

I'll tell you how it goes when you fork a popular project such as Chromium. If you're, say, Microsoft Edge, you take a snapshot of the Chromium codebase and then you modify it to add or remove features and you release your derivative. But then, as time goes on, you would need to rebase your code on the upstream Chromium so you can take advantage of all the security fixes, new features and bug fixes that Google has landed on the upstream codebase.

Web browsers are massively complicated programs and the Web is a fast moving target. Every day there are new vulnerabilities discovered that companies like Google and Mozilla need to fix in their browsers to keep them safe and secure. Google is constantly working on Chromium, and if you have a browser fork based on Chromium, you need to keep track of the upstream project and rebase your code on their latest changes to keep up.

Maintaining a web browser is a full-time job that requires hundreds or thousands of engineers. Even Microsoft could not keep up: they stopped trying to maintain Internet Explorer or their first version of Edge (which was based on IE), and threw in the towel and decided to make a Chromium based browser so that they could leverage the engineering talent of Google in providing a solid base for them to build on top of.

So, Google does something unpopular like Manifest V3 and early on, it's a simple issue to just revert those changes in Chromium when you rebase your browser. But over months or years, Chromium sees a lot more code changes, many of those changes touching the same parts of code that Manifest V3 touched. Suddenly, when you rebase your project on Chromium you get "merge conflicts" because parts of the code that you have customized (like, say, when you removed Manifest V3 before) can't be reconciled with the latest state of the Chromium code. So you need to figure out what the merge conflict is about and how to manually resolve it.
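Here is a toy illustration in Python of why those merge conflicts happen. It is a heavily simplified line-by-line "three-way merge" and not how git actually works internally; the function name `three_way_merge` and the sample "source code" lines are invented for this sketch. The idea: compare the fork's version and upstream's version of each line against the common ancestor. If only one side changed a line, the merge is automatic. If both sides changed the same line in different ways, a human has to step in.

```python
def three_way_merge(base, ours, theirs):
    """Merge two edited copies of a file, line by line, against their common base.

    All three arguments are lists of lines with the same length, which keeps
    the sketch simple (real merge tools also handle inserted and deleted lines).
    Returns (merged_lines, conflicts), where conflicts lists the line numbers
    that both sides changed in different ways.
    """
    merged, conflicts = [], []
    for number, (b, o, t) in enumerate(zip(base, ours, theirs), start=1):
        if o == t:        # both sides agree (or neither changed the line)
            merged.append(o)
        elif o == b:      # only upstream changed it: take upstream's line
            merged.append(t)
        elif t == b:      # only the fork changed it: keep the fork's line
            merged.append(o)
        else:             # both changed it differently: a human must decide
            merged.append(f"<<< fork: {o} ||| upstream: {t} >>>")
            conflicts.append(number)
    return merged, conflicts


# Hypothetical snapshot of one file at the time of the fork, then both edits.
base = ["load_extensions()", "allow_blocking = True", "render()"]
fork = ["load_extensions()", "allow_blocking = True  # keep old API", "render()"]
upstream = ["load_extensions_v2()", "allow_blocking = False", "render()"]

merged, conflicts = three_way_merge(base, fork, upstream)
print(conflicts)
```

In this example the first line merges cleanly because only upstream touched it, while the second line is a conflict because the fork and upstream both edited it. Multiply that by hundreds of upstream commits per day and you can see how the maintenance burden adds up.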

These little merge conflicts keep coming up, and the burden of keeping your fork of Chromium up to date while avoiding all the misfeatures you don't want only grows over time. Your browser team is minuscule compared to the headcount of the Google engineers who are churning out hundreds of commits per day to Chromium.

Eventually, all of the downstream Chromium browsers will feel the effects of whatever it is that Google wants to do with their browser engine. Web browsers are expensive to maintain and very few people other than Google have the budget or manpower to manage them. Again, even Microsoft could not keep up with their own browser engine.

There exist a few downstream Firefox derivatives too, such as LibreWolf or Zen.

Some of them customize Firefox to have better privacy features or add-ons by default, or they exist only because of some controversies of Mozilla that the developers decided were worth creating a Firefox fork over.

I don't use these derivatives for the following reasons:

See what I said above about Chromium: it is massively expensive to maintain a web browser, and if Mozilla is a small team compared to Google, these Firefox derivative maintainers are even smaller.

One of Mozilla's largest sources of income actually comes from Google: they pay Mozilla to keep Google as the default search engine. Google does this because it keeps the regulators at bay, so Google avoids getting an antitrust monopoly lawsuit by being able to say "but Firefox exists! And look, we support them financially, too!"

If, god forbid, Mozilla decides to give up on Firefox or is no longer able to maintain it, Firefox and all downstream derivatives will be dead. The Gecko engine would not keep up with security fixes for long, and the tiny teams who maintain Firefox forks won't be able to keep going.

So, I stick with Firefox proper because that's where Mozilla is.

Of course, Mozilla and Firefox are not without their own controversies. I said at the top of the post that Mozilla is the least abusive browser vendor, not that they have never done anything to stir up bad press among the community.

However, I do always find it ironic when I see comment threads on Reddit where people dunk on Mozilla about something relatively minor, and then go running with open arms back to Google Chrome, which is abusing them 100X more severely. But I digress.

Some of the most common things I hear people rail on Mozilla about include:

I did a bit of research just now to dig up what the Mozilla controversies were, and the above are just about it. Some people were unhappy about Mozilla commenting on politics before. Again, though, I find it funny when people crucify Mozilla over something small and then run to Google Chrome, with Google's whole dedicated wiki page of controversies, or to browsers like Brave, with their own shady dealings and "sorry we got caught" history of controversies.

The only way you get an ethically spotless web browser, I suppose, is to go with a purely volunteer-driven, open source, non-corporate one like those Firefox downstream browsers. But, again, those browsers will not exist if Mozilla ever stops developing Firefox so this is why I just stick to Firefox and have for decades, ever since they wrested some market share away from Internet Explorer 6.

Now, feel free to fight it out in the comments but this is my hot take and I'm sticking to it. 😎

I had originally written this post on my NSFW blog back around May of this year. With the recent surge of interest in Bluesky, I thought I would copy it over here to my tech blog to give it some wider visibility.

Bluesky is a very interesting app, and it is different in some important ways from Twitter and the others that came before it.

Bluesky will not enshittify over time like its predecessors have. On the surface, it appears to be "just another Twitter" but the really interesting technology is happening under the hood. Bluesky is being developed in such a way that Bluesky (the corporation) can let go of it and allow it to be distributed as an open source, open standard network that is not under the control of a single corporation who could one day destroy it.

Below I will paste my original blog post with some of the NSFW paragraphs edited out for the different audience that I have on Kirsle.net.

I've been on Bluesky for about 4 months now and have learned a bit more about how it works and I find it rather interesting, so I thought I'd write about it and give some advice on how to get started and especially how to find your fellow nudists & exhibitionists there. So, in a similar spirit to my Getting started with Mastodon post, I'll write about Bluesky especially for an audience of people who aren't already using it. And even if you are already on Bluesky, maybe you'll learn something cool from this post anyway!

Compared to Mastodon, getting started on Bluesky is fairly simple right now: there is only one front-end web server available so far, at https://bsky.app/ where you can go and sign up in a familiar way like any other site you've used. Bluesky intends to support federated instances in the future (and they've made some good progress on that, so far), which I'll get into below, but for now at least you can avoid the choice paralysis of needing to find a server to sign up on like you have with Mastodon.

In this post I'll tell you about how content discovery works, how adult content is tagged and how to find your people to follow. And I'll also go into what's especially interesting about the way that Bluesky works as compared to Mastodon and the rest of the related fediverse.

First, let's address the elephant in the room. On Mastodon, the general sentiment about Bluesky is one of negative skepticism, and many people on Mastodon talk shit about Bluesky and compare it to Twitter. After Elon Musk took over Twitter and started making a lot of people very upset, a lot of them flocked over to Bluesky and the folks on Mastodon say things like "you're moving from one platform created by a billionaire, to another platform created by a billionaire, and you think this time it's going to be different? Have you learned nothing?"

Well, to that I say: this time it absolutely can be different, because Bluesky is not just another centralized website controlled by a corporation in the way that Twitter (and Facebook, Instagram, etc.) are. The key technology behind Bluesky is called the AT Protocol which is being developed and released as an open source standard for federated web services, and Bluesky is only one app that runs on the AT Protocol. The thing with an open standard is that everybody can participate in it, and people can write their own custom apps that use it, and they can all inter-operate with all the other apps using it, and once that's out in the wild there is no controlling it or governing it from the top down.

Mastodon is a good example of this: the server-to-server language behind the scenes on Mastodon is called ActivityPub - it's what allows Mastodon servers to be hosted by many different organizations or individuals and still allow you to follow and comment on people anywhere else. And Mastodon is far from the only ActivityPub app: Pixelfed and PeerTube are just a couple of other examples. So, even though Bluesky was started by a billionaire as an internal project at Twitter, the technology they are building to run it on is meant to be a distributed open standard.

The problems we faced with centralized sites like Twitter, where a change in ownership or a pivot in business strategy left all their users held hostage, aren't nearly as likely with federated services like Mastodon because you can simply move to a server run by somebody you trust better. There's no way it can be enshittified from the top down in the way a centralized platform will be.

Now, it is fair to remain skeptical of Bluesky until full federation and open-source self-hosting become possible. They are actively developing the AT Protocol and, while third-party developers are already writing code to support it, the system is not fully open and ready yet. The downside of building an open standard is that, once it's out there, you've lost control of the direction it will evolve. It's important to design it as best you can in the beginning, which is why Bluesky has been going at such a slow pace. But they've been continually making good progress: it is now possible to self-host your data on your own server, and complete data migration of your account to your own server is working (and is much more fully featured than a Mastodon account migration, which I'll get more into below).

So, let's get into the user experience of Bluesky. You've created a new account and have a lot of questions, like: how do I find people to follow? How is "adult content" tagged and discovered?

One thing that I noticed pretty quickly was that hashtags don't exist on Bluesky (though I've seen some screenshots to suggest this feature is 'coming soon', edit: we have them now). I would post some of my nudes, though, and get followed by random people and I didn't know how they were even discovering my page, especially if my recent post hadn't yet been reblogged by any of my followers!

Instead of hashtags, Bluesky has a feature called "feeds." When you sign up for an account, they'll suggest a few feeds for you to discover, themed around various topics. I followed a few feeds with names like: Science, Astronomy, Developers, and Fungi Friends.

I don't know how feeds are created, but it seems that a developer somewhere had to write some code to create a feed. You don't need to worry about that, though: if you find the feed you can simply follow it/add it to your home timeline and be able to see the posts that the feed has discovered.

[redacted] Here are a few NSFW/adult content-oriented feeds I have found, if you're an exhibitionist like me and want to find your people there:

You may need to go to your "Settings -> Moderation -> Content Filters" to enable adult content if you like to see that stuff. 😈

Sharing a post on Bluesky should be very familiar compared to Twitter or anything else you've used. You can write some text and attach some pictures.

A couple of notable aspects of sharing a post that I want to highlight are:

I highly recommend writing alt texts because: those "Feeds" that help others discover your post will see your alt texts as well. When I wondered how people were discovering my posts without any hashtags, that was how. You don't need to spam a bunch of hashtags in your posts like you did on Twitter, you can just graphically describe what is shown in your pictures and be picked up on the relevant feeds for people who want to see that stuff.
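To picture how a feed could pick up a post from its alt text alone, here is a purely hypothetical sketch in Python. This is not Bluesky's actual feed code or the AT Protocol API: the `matches_feed` function, the dictionary layout of a post, and the keyword list are all assumptions made up for illustration. It simply treats a feed as a keyword filter that searches both the post text and the image descriptions.

```python
def matches_feed(post, keywords):
    """Return True if any keyword appears in the post text or any image alt text."""
    searchable = [post["text"]] + post.get("alt_texts", [])
    combined = " ".join(searchable).lower()
    return any(keyword.lower() in combined for keyword in keywords)


# Two made-up posts: the first only mentions its topic in the alt text.
posts = [
    {"text": "Look what I found today",
     "alt_texts": ["A red mushroom growing on a mossy log"]},
    {"text": "Monday again", "alt_texts": []},
]

# A hypothetical "fungi" feed defined by a short keyword list.
fungi_feed = [p for p in posts if matches_feed(p, ["mushroom", "fungi"])]
print(len(fungi_feed))
```

Real feeds may well use smarter matching than plain keywords, but the takeaway is the same: a descriptive alt text gives a feed something to match on even when the post text itself says nothing about the topic.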

And also: describing nude pictures in writing is just a very fun exercise in itself. 😈

I should also share some of the current lack of features or quirks about Bluesky that you may want to know about here.

Issues like these seem to be on the radar of the Bluesky/AT Protocol developers and may be improved upon in the future. For me personally, they are non-issues: I've always treated my Twitter (etc.) page as fully public, I've rarely ever had to block anybody, and for DMs you have always been better off taking your conversations to proper chat platforms anyway.

I think I should elaborate on that last point: Mastodon has Direct Message support but their web interface is clear that DMs on Mastodon are not secure. They say that because a DM needs to be synced to the other server your friend is on, and your server admins on both sides do have the technical capability to look in their database and read your DMs. New users on Mastodon are surprised about this, but guess what: this has always been the status quo even on centralized sites like Twitter. Whoever runs the server and database has always been able to read your DMs if they so chose, unless your DMs are explicitly "end-to-end encrypted," which they usually have not been.

I don't know how Bluesky will implement DMs, but they seem to share this concern and (I suspect) they will bring DMs only when they are able to end-to-end encrypt them properly, which is a hard problem to solve for website-based applications, for reasons I won't get into here.

But anyway, if you're one who liked to run a "private" page where almost nobody but your friends can see your posts, etc., I thought it fair to point out how Bluesky currently works in that regard.

Mastodon and ActivityPub more generally are interesting, but I wrote before about some of the pain points and issues I have with it.

The good news is, Bluesky and the AT Protocol address some of the biggest pain points in ways that I find very interesting. In fact, the whole reason that they are developing the AT Protocol to begin with is because they found ActivityPub to have fundamental limitations that were at odds with their vision of how much better an open, federated social network could be.

A couple of examples in particular of the pain points with Mastodon include:

On Mastodon, if you no longer like your current server and want to move, you can "migrate" your account to a new place. However, this doesn't work the way that most people would hope: only your followers are moved over, but not your posts, and not the list of people who you follow.

Migrating to a new Mastodon host is basically like starting over with a fresh new account. The only nice thing that Mastodon migration does for you is to automatically move your followers, so you don't need to tell them about your new profile and they'll update to follow it automatically. Usually. Your mileage may vary with non-Mastodon software and you still might lose some followers in the move.

One of my #1 top favorite features of sites like Tumblr or Twitter was how I could write a bunch of posts over many years, and at any time, somebody who newly discovers my page was able to scroll all the way back on my timeline, if they wanted, and easily see everything I had ever posted before.

On Mastodon, this usually doesn't work very well: following somebody on Mastodon is more like subscribing to their newsletter. Your local server will show you their new posts going forward from the moment you began following them, but chances are very good that your local server does not know about their old posts from before. The only time their older posts may be available is if your local server already knew about them (e.g., because somebody else on your server also follows them, and so has been bringing in their posts). This is a fundamental problem with ActivityPub more generally: it is an "inbox/outbox" based protocol and it lacks a standard method to deeply retrieve historical posts from another server.

On Mastodon, it is a common experience that somebody follows you and you visit their profile, and only see maybe a couple of posts, and a notice that says "Older posts from this user are not available, click here to go to their Mastodon server to see their full page." That would be okay, but if that user is primarily NSFW and all their posts are nudity/porn, it becomes very tedious to scroll back through their page: you need to click to reveal every single blurred picture, because you probably don't have a local Mastodon account on their server, so you can't have an "automatically show me NSFW content" setting there. Speaking for me personally, I never dig very deep into somebody's page on Mastodon if I need to put up with all of that.

As I mentioned on my Fediverse pain points post, moderation on Mastodon is handled at the server level: your local server administrator makes decisions about how to moderate content coming from other servers, in ways that can harm their local users if these decisions are not ones that you would agree with. And as an end user, your only recourse then is to migrate your account to some other Mastodon server, run by somebody you like better, or to self-host your own Mastodon server where you get to decide on these things.

In an ideal world, this would be OK: since Mastodon is open source and anybody can run a server, sometimes those servers will be home to terrible people, such as racists or transphobes or general assholes. Your server admin being able to wholesale ban these servers keeps you and the rest of your users safe. But sometimes, server bans are put in for absolutely stupid reasons, personal beef between two server admins, over drama that you don't really care about, and now because a server block was put in place, you are cut off from your friends who had accounts on the other server.

The only way to avoid getting caught as collateral damage in moderator drama like this is to self-host your own personal server, where you alone get to make these moderation decisions, but that comes with its own helping of downsides: needing to have the technical skills to run a server correctly, not having a local timeline of like-minded users to discover, etc.

Recently, Bluesky has open sourced their Personal Data Server code which allows you to self-host your data on your own machine, instead of hosting it on Bluesky-managed servers. Along with the PDS, you are able to migrate your account from Bluesky's servers onto your own.

Bluesky migrations are full data migrations. They don't just move your followers like Mastodon does: they deeply migrate all of your data, including your complete timeline of posts and their attached images. This is what Mastodon users hoped for when they saw there was an ability to migrate to other servers when needed, but Mastodon fell far short of user expectations here.

And, Bluesky solves the timeline problem here too. My account is all hosted on the main Bluesky server, but I follow some self-hosting enthusiasts who've already set up their own PDS servers, and I can view their profile page within Bluesky's (bsky.app) web interface, and I can scroll all the way back to the beginning of their timelines if I want to, and I don't have to click to reveal all their NSFW images because I'm viewing them from the server my account is on, where I have opted-in to see adult content without blurring. So again here, Bluesky's style of federation does what I would hope for and expect Mastodon to do, but which Mastodon does not do.

And finally, Bluesky puts moderator decisions in the hands of users where it belongs. Admittedly, moderating 'the entire Internet' is a big job and most people don't really want this responsibility, so Bluesky has "moderator lists" where you can choose to follow somebody else's list if you like theirs. So if you want to keep yourself shielded from terrible people, you can follow somebody's moderation list where they do that job for you. But if they make decisions you don't like, or they cut you off from your friends on accident, you can fix that yourself by changing which lists you follow. You avoid inter-admin drama over silly nonsense you don't care about that way. For a bit more reading, here is a recent Bluesky blog post about moderation.

Identity regards your username or how people discover you in the app.

On Mastodon, your identity is tied closely to the Mastodon server you signed up on. If you signed up on the server "mstdn.social" your Mastodon handle looks like "@yourname@mstdn.social". If I want somebody to follow me on Mastodon, I give them that full identity handle and they paste it into their search bar to bring me up.

The down side comes when I want to migrate to another server: my identity will change! My first Mastodon profile was @redacted@mastodon.social because I signed up on the mastodon.social server. I've migrated a few times since then, which always gave me a new identity. I was able to move (most) of my followers each time, so they didn't really need to care that much, but I did need to update my blog and find/replace all the places I linked to my profile and update to the new location.

On Bluesky, identity is de-coupled from the server you signed up on. They rely on the tried and true Domain Name System for identity. For most Bluesky users, they have names that look like soandso.bsky.social where they have the "bsky.social" domain in their handle. This may be OK for most people who don't own their own custom domain names, but you don't have to have a .bsky.social handle. My handle on Bluesky is my blog's domain name: @kirsle.net. If you have your own domain name, you can use it as your Bluesky handle, and then you never need to worry about changing it, even if you migrated to a different Bluesky server in the future!

And even if you have a .bsky.social handle now: you could keep that handle even if you migrate servers, too. But if you were interested in self-hosting your data, getting your own domain name is the most sure way to make sure you're always in charge of your identity.
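For the geeks, here is roughly why a domain name can work as a handle. As I understand the AT Protocol documentation, a handle gets resolved to a permanent account ID (a "DID") by looking up a DNS TXT record at `_atproto.<your handle>` whose value looks like `did=did:plc:...`. The sketch below is only an illustration of that lookup: the function names are my own and the DID in the usage note is made up.

```javascript
// Sketch: pick the account DID out of the TXT records for _atproto.<handle>.
// `records` has the shape returned by Node's dns.promises.resolveTxt():
// an array of records, each of which is an array of string chunks.
function didFromTxtRecords(records) {
  const dids = records
    .map((chunks) => chunks.join(""))
    .filter((value) => value.startsWith("did="))
    .map((value) => value.slice("did=".length));
  // Assumption: zero answers, or several conflicting ones, count as "not resolved".
  const unique = [...new Set(dids)];
  return unique.length === 1 ? unique[0] : null;
}

// Hypothetical wrapper that performs the real DNS query (needs network access).
// resolveTxt() rejects when the record does not exist, so callers should catch.
async function resolveHandle(handle) {
  const { resolveTxt } = require("node:dns").promises;
  const records = await resolveTxt(`_atproto.${handle}`);
  return didFromTxtRecords(records);
}
```

Calling `didFromTxtRecords([["did=did:plc:example123"]])` returns `"did:plc:example123"`. Since the handle is just a pointer to that ID, the data behind it can move to a different server without the handle changing.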

Should you check out Bluesky, my profile link is at https://bsky.app/profile/kirsle.net and you can give me a follow.

I think that'll do it for today, until I have any more exciting news about Bluesky (probably when they make further advancements towards allowing open federation and full self-hosting). When the dust settles with all of that, I plan on setting up my own Bluesky servers for myself! 😎

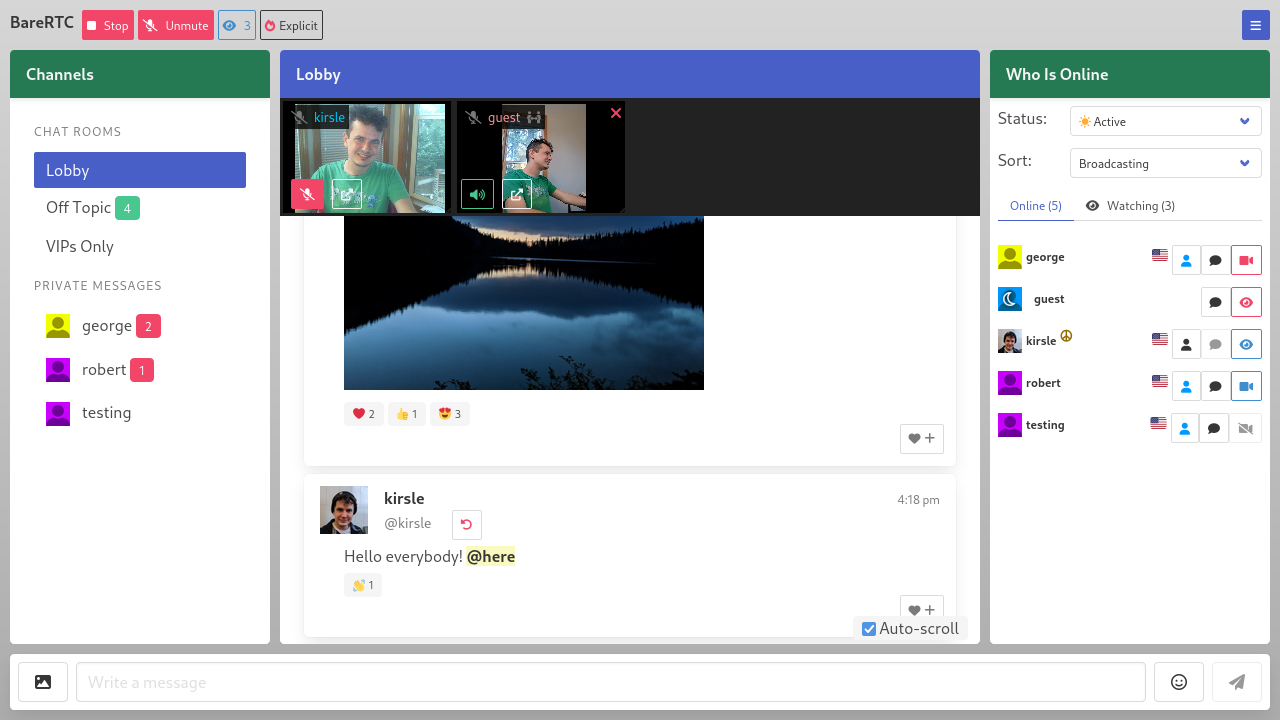

A while back (February 2023) I built an open source webcam chat room for one of my side project websites.

It was very much designed to resemble those classic, old school Adobe Flash based webcam chat rooms: where it is first and foremost a text based chat (with public channels and private messages), and where some people could go on webcam and they could be watched by other people in the chat room, in an asynchronous manner.

It didn't take terribly long to get the chat room basically up and running, and working well, for browsers such as Chrome and Firefox - but it was a whole other story to get it to work as well for Apple's web browsers: Safari, and iPads and iPhones.

This blog post will recount the last ~year and change of efforts to get Safari to behave nicely with my chat room, and the challenges faced and lessons learned along the way.

First, it will be helpful to describe the basic features of the chat room and how I designed it to work, to set some context to the Safari specific challenges I faced along the way.

There are a few other features on top of these, but the above are the basic fundamentals that are relevant to this story about getting this all to work on Safari.

The additional features include:

The underlying web browser standard that allows videos to be shared at all is called WebRTC, which stands for "Web Real Time Communication." It is supported in all major web browsers, including Safari, but the devil is in the details.

WebRTC basically enables two web browsers to connect to each other, directly peer-to-peer, and exchange data (usually, video and audio data but any kind of data is possible). It can get two browsers to connect even when both sides of the connection are behind firewalls or behind a NAT (as 99% of regular home Internet users are).

For my chat room, it means that webcam data is sent directly between the chat users and none of it needs to pass through my server (which could be expensive for me to pay for all that bandwidth!).

It's a rather complex and poorly documented system, but for the sake of this blog post I will try to distill it down to its bare essence. The following is massively simplified, but if you're curious to dive into the weeds on it, the best resource I found online is this free e-book: WebRTC for the Curious.

When two users are logged on to my chat room, and one wants to open the other's camera, the basic ingredients that make the WebRTC magic work include:

The signaling server in WebRTC is much simpler than it sounds: it is really just any server you write which is capable of passing messages along, back and forth between the two parties who want to connect. It could be a WebSocket server, it could be based on AJAX requests to a PHP script, it could even be printed out on a post card and delivered by snail mail (though that way would take the longest).

For my chat room's use case, I already had a signaling server to use: my WebSockets server that drives the rest of the chat room.

The server side of the chat room was a WebSockets server, where users would post their chat messages and the server would broadcast those back out to everybody else, and the server would push "Who's Online" list updates, etc. - so I just added support for this same WebSockets server to allow forwarding WebRTC negotiation messages between the two users.
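To show how little the signaling part really needs to do, here is a minimal in-memory sketch of a relay. It is not my chat server's actual code: the class name, the message shape (`to`, `type`, `payload`) and the `send` callbacks are assumptions for illustration. In a real server each `send` callback would write to that user's WebSocket connection.

```javascript
// Minimal in-memory sketch of a signaling relay.
class SignalingRelay {
  constructor() {
    this.clients = new Map(); // username -> send(messageString)
  }

  join(username, send) {
    this.clients.set(username, send);
  }

  leave(username) {
    this.clients.delete(username);
  }

  // Forward an opaque WebRTC negotiation message from one user to another.
  // The relay never inspects the payload; it only stamps who it came from.
  // Returns false when the recipient is not connected.
  forward(from, rawMessage) {
    const msg = JSON.parse(rawMessage);
    const deliver = this.clients.get(msg.to);
    if (!deliver) return false;
    deliver(JSON.stringify({ type: msg.type, from, payload: msg.payload }));
    return true;
  }
}
```

Offers, answers and connection candidates can all travel through the same `forward()` call, because the server doesn't need to understand any of them.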

There are a couple of important terms used in WebRTC that are not super intuitive at first glance.

The two parties of a WebRTC connection are named the Offerer and the Answerer.

Both the Offerer and the Answerer are able to attach data channels to their side of the connection. Most obviously, the Answerer will attach their active webcam feed to the connection, so that the Offerer (who wanted to watch it) is able to receive it and show it on their screen.

The Offerer is also able to attach their own camera to that opening connection, as well, and their video data will be received automatically on the Answerer's side once the connection is established. But, more on that below.

So, going back to the original design goals of my chat room above, I wanted video sharing to be "asynchronous": it must be possible for Alice, who is not sharing her video, to be able to watch Bob's video in a one-directional manner.

The first interesting thing I learned about WebRTC was that this initially was not working!

So the conundrum at first, was this: I wanted Alice to be able to receive video, without sharing her own video.

I found that I could do this by setting these parameters on the initial offer that she creates:

    pc.createOffer({
        offerToReceiveVideo: true,
        offerToReceiveAudio: true,
    });

Then Alice will offer to receive video/audio channels despite not sharing any herself, and this worked OK.

But, I came to find out that this did not work with Safari, but only for Chrome and Firefox!

I learned that there were actually two major iterations of the WebRTC API, and the above hack was only supported by the old, legacy version. Chrome and Firefox were there for that version, so they still support the legacy option, but Safari came later to the game and Safari only implemented the modern WebRTC API, which caused me some problems that I'll get into below.

So, in February 2023 I officially launched my chat room and it worked perfectly on Firefox, Google Chrome, and every other Chromium based browser in the world (such as MS Edge, Opera, Brave, etc.) - asynchronous webcam connections were working fine, people were able to watch a webcam without needing to share a webcam, because Firefox and Chromium supported the legacy WebRTC API where the above findings were all supported and working well.

But then, there was Safari.

Safari showed a handful of weird quirks, differences and limitations compared to Chrome and Firefox, and the worst part about trying to debug any of this, was that I did not own any Apple device on which I could test Safari and see about getting it to work. All I could do was read online (WebRTC stuff is poorly documented, and there's a lot of inaccurate and outdated information online), blindly try a couple of things, and ask some of my Apple-using friends to test once in a while to see if anything worked.

Slowly, I made some progress here and there and I'll describe what I found.

The first problem with Safari wasn't even about WebRTC yet! Safari did not like my WebSockets server for my chat room.

What I saw when a Safari user tried to connect was: they would connect to the WebSockets server, send their "has entered the room" message, and the chat server would send Safari all the welcome messages (listing the rules of the chat room, etc.), and it would send Safari the "Who's Online" list of current chatters, and... Safari would immediately close the connection and disconnect.

Only to try and reconnect a few seconds later (since the chat web page was programmed to retry the connection a few times). The rest of the chatters online would see the Safari user join/leave, join/leave, join/leave before their chat page gave up trying to connect.

The resolution to this problem turned out to be: Safari did not support compression for WebSockets. The WebSockets library I was using had compression enabled by default. Through some experimentation, I found that if I removed all the server welcome messages and needless "spam", that Safari was able to connect and stay logged on -- however, if I sent a 'long' chat message (of only 500 characters or so), it would cause Safari to disconnect.

The root cause came down to: Safari didn't support WebSocket compression, so I needed to disable compression and then Safari could log on and hang out fine.

So, finally on to the WebRTC parts.

Safari browsers were able to log on to chat now, but the WebRTC stuff simply was not working at all. The Safari user was able to activate their webcam, and they could see their own local video feed on their page, but this part didn't involve WebRTC yet (it was just the Media Capture and Streams API, i.e. getUserMedia, accessing their webcam and displaying it in a <video> element on the page). In my chat room, the Safari user was able to tell the server: "my webcam is on!", and other users would see a clickable video button on the Who List, but when they tried to connect to watch it, nothing happened.

So, as touched on above, WebRTC is an old standard and it had actually gone through two major revisions. Chrome and Firefox were there for both, and they continue to support both versions, but Safari was newer to the game and they only implemented the modern version.

The biggest difference between the old and new API is that functions changed from "callback based" into "promise based", e.g.:

    // Old API would have callback functions sent as parameters
    pc.setLocalDescription(description, onSuccess, onFailure);

    // New API moved to use Promises (".then functions") instead of callback functions
    pc.setLocalDescription(description).then(onSuccess).catch(onFailure);

The WebRTC stuff for Safari wasn't working because I needed to change these function calls to be Promise-based instead of the legacy callback function style.

By updating to the modern WebRTC API, Safari browsers could sometimes get cameras to connect, but only under some very precise circumstances:

This was rather inconvenient and confusing to users, though: the Safari user was never able to passively watch somebody else's camera without their own camera being on, but even when they turned their camera on first, they could only open about half of the other cameras on chat (only the users who wanted to auto-open Safari's camera in return).

This was due to a couple of fundamental issues:

offerToReceiveVideo: true option), which Safari did not support.

For a while, this was the status quo. Users on an iPad or iPhone were encouraged to try switching to a laptop or desktop PC and to use a browser other than Safari if they could.

There was another bug on my chat room at this point, too: the Safari browser had to be the one to initiate the WebRTC connection for anything to work at all. If somebody else were to click to view Safari's camera, nothing would happen and the connection attempt would time out and show an error.

This one, I found out later, was due to the same "callback-based vs. promise-based" API for WebRTC: I had missed a spot before! The code path where Safari is the answerer and it tries to respond with its SDP message was using the legacy API and so wasn't doing anything, and not giving any error messages to the console either!
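For illustration, the answerer's code path written purely against the promise-based API might look like the sketch below. This is not the chat room's actual code: `answerOffer` and `sendSignal` are hypothetical names, and `sendSignal` stands in for whatever hands a message to the signaling server.

```javascript
// Sketch of the answerer's side using only the promise-based calls.
// `pc` is an RTCPeerConnection, `offer` is the { type, sdp } object received
// from the offerer, and `sendSignal` is a hypothetical delivery function.
async function answerOffer(pc, offer, sendSignal) {
  await pc.setRemoteDescription(offer);
  const answer = await pc.createAnswer();
  await pc.setLocalDescription(answer);
  // Only reply once the local description has actually been applied.
  sendSignal({ type: "answer", payload: pc.localDescription });
}
```

A nice side effect of the promise style is that any failing step rejects the returned promise, so a `.catch(console.error)` at the call site turns a silent failure into a visible one.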

At this stage, I still had no access to an Apple device to actually test on, so the best I could do was read outdated and inaccurate information online. It seems the subset of software developers who actually work with WebRTC at this low of a level are exceedingly rare (and are all employed by large shops like Zoom who make heavy use of this stuff).

I had found this amazing resource called Guide to WebRTC with Safari in the Wild which documented a lot of Safari's unique quirks regarding WebRTC.

A point I read there was that Safari only supported two-way video calls, where both sides of the connection are needing to exchange video. I thought this would be a hard blocker for me, at the end of the day, and would fly in the face of my "asynchronous webcam support" I wanted of my chat room.

So the above quirky limitations: where Safari needed to have its own camera running, and it needed to attach it on the outgoing WebRTC offer, seemed to be unmoveable truths that I would have to just live with.

And indeed: since Safari didn't support offerToReceiveVideo: true to set up a receive-only video channel, and there was no documentation on what the modern alternative to that option should be, this was seeming to be the case.

But, it turned out even that was outdated misinformation!

Seeing what Safari's limitations appeared to be, in my chat room I attempted a sort of hack, that I called "Apple compatibility mode".

It seemed that the only way Safari could receive video, was to offer its own video on the WebRTC connection. But I wanted Safari to at least, be able to passively watch somebody's camera without needing to send its own video to them too. But if Safari pushed its video on the connection, it would auto-open on the other person's screen!

My hacky solution was to do this:

But, this is obviously wasteful of everyone's bandwidth, to have Safari stream video out that is just being ignored. So the chat room would only enable this behavior if it detected you were using a Safari browser, or were on an iPad or iPhone, so at least not everybody was sending video wastefully all the time.
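A rough sketch of that kind of detection is below. The function name and the exact patterns are illustrative assumptions rather than code lifted from the chat room, and User-Agent sniffing is only ever a heuristic.

```javascript
// Sketch: guess from the User-Agent string whether a browser is running
// Apple's WebKit engine and so would need the compatibility behavior.
function looksLikeSafari(userAgent) {
  // Every browser on iPhone/iPad is WebKit under the hood, Chrome and Firefox included.
  if (/\b(iPad|iPhone|iPod)\b/.test(userAgent)) return true;
  // Desktop Safari says "Safari/" but, unlike Chromium-based browsers, not "Chrome/".
  return /Safari\//.test(userAgent) && !/(Chrome|Chromium|Edg|OPR)\//.test(userAgent);
}
```

In the browser this would be called as `looksLikeSafari(navigator.userAgent)`.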

Recently, I broke my old laptop on accident when I spilled a full cup of coffee over its keyboard, and when weighing my options for a replacement PC, I decided to go with a modern MacBook Air with the Apple Silicon M3 chip.

It's my first Apple device in a very long time, and I figured I would have some valid use cases for it now:

The first bug that I root caused and fixed was the one I mentioned just above: when somebody else was trying to connect in to Safari, it wasn't responding. With that bug resolved, I was getting 99% to where I wanted to be with Safari support on my chat room:

The only remaining, unfortunate limitation was: the Safari user always had to have its local webcam shared before it could connect in any direction, because I still didn't know how to set up a receive-only video connection without offering up a video to begin with. This was the last unique quirk that didn't apply to Firefox or Chrome users on chat.

So, the other day I sat down to properly debug this and get it all working.

I had to find this out from a thorough Google search, landing on a Reddit comment thread where somebody was asking this very question: since the legacy offerToReceiveVideo option was removed and no alternative is documented in the new API, how do you get the WebRTC offerer to request video channels be opened without attaching a video itself?

It turns out the solution is to add what are called "receive-only transceiver" channels to your WebRTC offer.

    // So instead of calling addTrack() and attaching a local video:
    stream.getTracks().forEach(track => {
        pc.addTrack(track);
    });

    // You instead add receive-only transceivers:
    pc.addTransceiver('video', { direction: 'recvonly' });
    pc.addTransceiver('audio', { direction: 'recvonly' });

And now: Safari, while not sharing its own video, is able to open somebody else's camera and receive video in a receive-only fashion!
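Putting the two cases together, a helper that builds the offer might look something like this sketch. The function name `createViewerOffer` is hypothetical and this is a simplified illustration rather than my chat room's real code: it attaches the local camera when there is one, and adds a receive-only transceiver for any kind of media that isn't being sent.

```javascript
// Sketch: build an offer that can always receive video and audio,
// whether or not the offerer is sharing a camera of their own.
// `pc` is an RTCPeerConnection; `localStream` is a MediaStream or null.
async function createViewerOffer(pc, localStream) {
  const sendingKinds = new Set();
  if (localStream) {
    localStream.getTracks().forEach((track) => {
      pc.addTrack(track, localStream);
      sendingKinds.add(track.kind); // "video" or "audio"
    });
  }
  // For anything we are not sending, still ask to receive it.
  for (const kind of ["video", "audio"]) {
    if (!sendingKinds.has(kind)) {
      pc.addTransceiver(kind, { direction: "recvonly" });
    }
  }
  const offer = await pc.createOffer();
  await pc.setLocalDescription(offer);
  return pc.localDescription;
}
```

With `localStream` set to `null` this behaves like the receive-only snippet above, and with a full camera stream it behaves like the plain `addTrack()` version.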

At this point, Safari browsers were behaving perfectly well like Chrome and Firefox were. I also no longer needed that "Apple compatibility mode" hack I mentioned earlier: Safari doesn't need to superfluously force its own video to be sent on the offer, since it can attach a receive-only transceiver instead and receive your video normally.

There were really only two quirks about Safari at the end of the day:

And that second bit ties into the first: the only way I knew initially to get a receive-only video connection was to use the legacy offerToReceiveVideo option which isn't supported in the new API.

And even in Mozilla's MDN docs about createOffer, they point out that offerToReceiveVideo is deprecated but they don't tell you what the new solution is!

One of the more annoying aspects of this Safari problem had been, that iPad and iPhone users have no choice in their web browser engine.

For every other device, I can tell people: switch to Chrome or Firefox, and the chat works perfectly and webcams connect fine! But this advice doesn't apply to iPads and iPhones, because on iOS, Apple requires that every mobile web browser is actually just Safari under the hood. Chrome and Firefox for iPad are just custom skins around Safari, and they share all its same quirks.

And this is fundamentally because Apple is scared shitless about Progressive Web Apps and how they might compete with their native App Store. Apple makes sure that Safari has limited support for PWAs, and they do not want Google or Mozilla to come along and do it better than them, either. So they enforce that every web browser for iPad or iPhone must use the Safari engine under the hood.

Recently, the EU is putting pressure on Apple about this, and will be forcing them to allow competing web browser engines on their platform (as well as allowing for third-party app stores, and sideloading of apps). I was hopeful that this meant I could just wait this problem out: eventually, Chrome and Firefox can bring their proper engines to iPad and I can tell my users to just switch browsers.

But, Apple isn't going peacefully with this and they'll be playing games with the EU, like: third-party app stores and sideloading will be available only to EU citizens but not the rest of the world. And, if Apple will be forced to allow Chrome and Firefox on, Apple is more keen to take away Progressive Web App support entirely from their platform: they don't want a better browser to out-compete them, so they'd rather cripple their own PWA support and make sure nobody can do so. It seems they may have walked back that decision, but this story is still unfolding so we'll see how it goes.

At any rate: since I figured out Safari's flavor of WebRTC and got it all working anyway, this part of it is a moot point, but I include this section of the post because it was very relevant to my ordeal of the past year or so working on this problem.

Early on with this ordeal, I was thinking that Safari's implementation of WebRTC was quirky and contrarian just because they had different goals or ideas about WebRTC. For example, the seeming "two-way video calls only" requirement appeared to me like a lack of imagination on Apple's part: like they only envisioned FaceTime style, one-on-one video calls (or maybe group calls, Zoom style, where every camera is enabled), and that use cases such as receive-only or send-only video channels were just not supported for unknowable reasons.

But, having gotten to the bottom of it, it turns out that actually Safari was following the upstream WebRTC standard to a tee. They weren't there for the legacy WebRTC API like Firefox and Chrome were, so they had never implemented the legacy API; by the time Safari got on board, the modern API was out and that's what they went with.

The rest of it came down to my own lack of understanding combined with loads of outdated misinformation online about this stuff!

Safari's lack of compression support for WebSockets, however, I still hold against them for now. 😉

If you ever have the misfortune one day to work with WebRTC at a low level like I have, here are a couple of the best resources I had found along the way:

I frequently have pretty vivid, detailed and crazy dreams and when I have a particularly interesting one, I write it down in my dream journal. The one I awoke from this morning left such a strong impression on me that I also feel like sharing it here on my blog!

It involves artificial intelligence, humanoid robots and morally bankrupt billionaire corporations. I like to think I can tell a good story, so I hope you'll check this one out!

So at the start of this dream, I had received a mysterious package in the mail. It was a medium sized box, maybe three feet tall, and had no return address but it was addressed to me, and it wasn't anything that I ordered myself.

Inside the box was a humanoid robot, covered in sleek white panels and looking like something that Apple might design. I powered it on, and it was able to speak with me in plain English and seemed to have a level of intelligence on par with ChatGPT. It had arms and legs and a robot face and everything. Presumably it could walk and move around as well. When it would enter an idle mode (maybe to recharge), it would "turtle up" and retreat into its shell, taking up less space in the room, a few feet tall. When standing, it was the size of a small adult human, maybe 5 feet tall or so.

An A.I. generated image of what this robot may have looked like, created on deepai.org.

On a different day in this dream, I was hanging out in the living room and I had a couple of friends over, and the robot was in its idle pose nearby. Suddenly, the robot whirred to life, and panels on its body opened up, and then I can only describe that it 'attacked' me. Out of these panels, a bunch of wires shot out quickly, some with needles on the ends, which all found their way to my body. One such needle went into my arm, into a vein, reminiscent of when you are put on an IV drip at a hospital. I don't remember where the other wires landed.

The robot then injected me with something: tiny little machines. Some of them assembled themselves together inside my body, forming a larger disc shaped structure a couple of inches across, and I could see it move around under my skin and could feel it if I touched it. Most of the other tiny machines navigated their way through my veins, arteries and other ducts in my body.

I could feel all of these things moving around, and especially around my lower abdomen. I've never experienced having kidney stones before, but I imagine it could be similar, though instead of painful, the movement felt ticklish and highly sensitive, similar to post-orgasm sensitivity, as they worked their way around my body.

Naturally, I was freaking the fuck out, but I was also afraid to respond too violently to all of this in case I broke the robot and left its work in a half-complete state, which could result in a much worse outcome for me. My friends in the room with me were watching all this happen and they were shocked and didn't know what to do either.

During this time, the robot was unresponsive and didn't respond to any voice commands. It was in its idle pose, but clearly busy computing something, with lights on its chassis flashing and everything.

After no more than 10 minutes or so, the robot was done and it disengaged from my body, retracting all the wires and tools back into itself, closing its panels and then transforming into its humanoid social form to talk to me.

Of course, I asked it what the fuck just happened. It explained to me that it is a healthcare robot and it had just finished giving me an examination. It told me I had some plaque buildup in my arteries that it cleaned out for me, and that while my kidneys were in the range of normal healthy function, they were a bit on the lower end of that range (whatever that meant).

Naturally, I had a whole lot of questions. This robot was made from some highly sophisticated technology that I had never seen the likes of before.

I asked it who built it. The robot replied that it was created by a major multi-billion dollar pharmaceutical company. It told me the name of the company, though I don't remember it now.

I asked it why it was delivered to my house. It explained that the company who created it was facing some problems: they weren't cleared yet to begin human trials on the new technology, and they were facing fines or regulations and were at risk of going bankrupt or forced to shut down. They mailed these units out for free to random people as a way to circumvent the law, get some human test subjects, and (hopefully) prove that the machine is safe and effective. I was just one of the "lucky" ones who received one of these units.

I asked the robot whether I can opt-out of their test program, and the robot said I could not. In the room with us was a small figurine of Baymax from Big Hero 6. I picked it up and showed it to the robot and said: "in the movie Big Hero 6, they had healthcare robots and you could say to the robot 'I am satisfied with my care' and they will power down and stay that way" and the robot told me that that wouldn't work on it, and that it was designed for long-term active care.

I asked the robot whether I could tell people about all of this - I had a good mind to, at the very least, write about this experience on my blog. It strongly advised against me telling anybody about it. It didn't threaten me or tell me what would happen if I disobeyed, and I know I never signed a non-disclosure agreement about this, but given the highly advanced technology it had just displayed for me, I decided I would comply with it - at least for now. But I had found the whole thing highly sketchy and unethical and was beginning to contemplate what my next steps should be, before finally waking up from this dream.

Anonymous asks:

What is your favorite memory of Bot-Depot?

Oh, that's a name I haven't heard in a long time! I have many fond memories of Bot-Depot but one immediately jumped to mind which I'll write about below.

For some context for others: Bot-Depot was a forum site about chatbots that was most active from around 2002 to 2008 or so, during what I call the "first wave of chatbots" (it was the first wave I lived through, anyway). There was an active community of botmasters writing chatbots for the likes of AOL Instant Messenger, MSN Messenger, Yahoo! Messenger, and similar apps around that time (also ICQ, IRC, and anything else we could connect a bot to). The "second wave" was when Facebook Messenger, Microsoft Bot Platform, and so on came and went around the year 2016 or so.

My favorite memory about Bot-Depot was in how collaborative and innovative everyone was there: several of us had our own open source chatbot projects, which we'd release on the forum for others to download and use, and we'd learn from each other's code and make better and bigger chatbot programs. Some of my old chatbots have their code available at https://github.com/aichaos/graveyard, with the Juggernaut and Leviathan bots being some of my biggest that were written at the height of the Bot-Depot craze. Many of those programs aren't very useful anymore, since all the instant messengers they connected to no longer exist, and hence I put them up on a git repo named "graveyard" for "where chatbots from 2004 go to die" to archive their code but not forget these projects.

Most of the bots on Bot-Depot were written in Perl, and one particular chatbot I found interesting (and from which I learned a "mind blowing" trick I could apply to my own bots) was a program called Andromeda, written by Eric256, because it laid down a really cool pattern for how we could better collaborate on "plugins" or "commands" for our bots.

Many of the Bot-Depot bots would use some kind of general reply engine (like my RiveScript), and they'd also have "commands" like you could type /weather or /jokes in a message and it would run some custom bit of Perl code to do something useful separately from the regular reply engine. Before Andromeda gave us a better idea how to manage these, commands were a little tedious to manage: we'd often put a /help or /menu command in our bots, where we'd manually write a list of commands to let the users know what's available, and if we added a new command we'd have to update our /help command to mention it there.

Perl is a dynamic language that can import new Perl code at runtime, so we'd usually have a "commands" folder on disk, and the bot would look in that folder and require() everything in there when it starts up, so adding a new command was as easy as dropping a new .pl file in that folder; but if we forgot to update the /help command, users wouldn't know about the new command. Most of the time, when you write a Perl module that you expect to be imported, you would end the module with a line of code like this:

    1;

And that's because in Perl, when you write a statement like require "./commands/help.pl"; Perl loads that code and expects the final statement of that code to be something truthy; if you forgot the "1;" at the end, Perl would throw an error saying it couldn't import the module because it did not return a true value. So me and many others thought of the "1;" as just standard required boilerplate that you always needed when you want to import Perl modules into your program.
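To make that concrete, here is a minimal sketch of the behavior. The file names (good.pl, bad.pl) and the helper write_file are made up for this illustration; the script writes two throwaway files into a temp directory so it can run on its own.

```perl
use strict;
use warnings;
use File::Temp qw(tempdir);

# Scratch directory that is deleted again when the script exits.
my $dir = tempdir(CLEANUP => 1);

# Hypothetical helper: write a small Perl file and return its path.
sub write_file {
    my ($path, $code) = @_;
    open(my $fh, '>', $path) or die "Can't write $path: $!";
    print {$fh} $code;
    close($fh);
    return $path;
}

# good.pl ends in the usual "1;", bad.pl ends in a false value instead.
my $good = write_file("$dir/good.pl", "sub hello { 'hi' }\n1;\n");
my $bad  = write_file("$dir/bad.pl",  "sub oops { 'hi' }\n0;\n");

# require dies when the file's final value is false, so wrap it in eval.
my $good_ok = eval { require $good; 1 };
my $bad_ok  = eval { require $bad;  1 };
my $error   = $@;

print "good.pl loaded OK\n" if $good_ok;
print "bad.pl failed: $error" unless $bad_ok;
```

The second require fails with Perl's "did not return a true value" error, which is the one you'd run into after forgetting the "1;".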

What the Andromeda bot showed us, though, is that you can use other 'truthy' objects in place of the "1;" and the calling program can get the value out of require(). So, Andromeda set down a pattern of having "self-documenting commands" where your command script might look something like:

    # The command function itself, e.g. for a "/random 100" command that would
    # generate a random number between 0 and 100.
    sub random {
        my ($bot, $username, $message) = @_;
        my $result = int(rand($message));
        return "Your random number is: $result";
    }

    # Normally, the "1;" would go here so the script can be imported, but instead
    # of the "1;" you could return a hash map that describes this command:
    {
        command     => "/random",
        usage       => "/random [number]",
        example     => "/random 100",
        description => "Pick a random number between 0 and the number you provide.",
        author      => "Kirsle",
    };

Then, when the chatbot program imports your folder full of commands, it collects these self-documenting objects from the require statements, like so:

    # A hash map of commands to their descriptions
    my %commands = ();

    # Load all the command scripts from disk
    foreach my $filename (<./commands/*.pl>) {
        my $info = require $filename;

        # Store their descriptions related to the command itself
        $commands{ $info->{'command'} } = $info;
    }

And now your /help or /menu command could be written to be dynamic: it loops over all the loaded commands and automatically comes up with the list of commands (with examples and descriptions) for the user. Then, to add a new command to your bot, all you do is drop the .pl file into the right folder and restart your bot, and your /help command automatically tells a user about the new command!
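A dynamic /help command along those lines might look roughly like this. It's a sketch, not the actual Andromeda or Leviathan code: the function name help_text and the output format are my own invention, the "/fortune" entry is a made-up example, and the %commands hash is filled in by hand here so the snippet runs on its own (in a real bot it would be populated by the require loop).

```perl
use strict;
use warnings;

# Hand-written stand-in for the metadata collected from the command scripts.
# The "/fortune" entry is hypothetical, for illustration only.
my %commands = (
    "/random" => {
        command     => "/random",
        usage       => "/random [number]",
        example     => "/random 100",
        description => "Pick a random number between 0 and the number you provide.",
        author      => "Kirsle",
    },
    "/fortune" => {
        command     => "/fortune",
        usage       => "/fortune",
        example     => "/fortune",
        description => "Open a fortune cookie.",
        author      => "Kirsle",
    },
);

# Build the /help reply from whatever commands happen to be loaded.
sub help_text {
    my ($commands) = @_;
    my @lines = ("Available commands:");
    foreach my $name (sort keys %$commands) {
        my $info = $commands->{$name};
        push @lines, "$info->{usage} - $info->{description} (e.g. $info->{example})";
    }
    return join("\n", @lines);
}

print help_text(\%commands), "\n";
```

Since the list is generated from the metadata, a newly dropped-in command shows up in /help with no extra bookkeeping.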

For an example: in my Leviathan bot I had a "guess my number" game in the commands folder: https://github.com/aichaos/graveyard/blob/master/Leviathan/commands/games/guess.pl

Or a fortune cookie command: https://github.com/aichaos/graveyard/blob/master/Leviathan/commands/funstuff/fortune.pl

After we saw Andromeda set the pattern for self-documenting commands like this, I applied it to my own bots. Members on Bot-Depot would pick one bot program or another that they liked, and then the community around that program would write their own commands and put them up for download. Other users could then download a .pl file, drop it into the right folder and easily add the command to their own bots!

I think there was some effort to make a common interface for commands so they could be shared between types of chatbot programs, too; but this level of collaboration and innovation on Bot-Depot is something I've rarely seen anywhere else since then.

We had also gone on to apply that pattern to instant messenger interfaces and chatbot brains, as well. Leviathan had a "brains" folder which followed a similar pattern: it came with a lot of options for A.I. engines to power your bot's general responses with, including Chatbot::Alpha (the precursor to my RiveScript), Chatbot::Eliza (an implementation of the classic 1960s ELIZA bot), and a handful of other odds and ends - designed in a "pluggable", self-documenting way where somebody could contribute a new type of brain for Leviathan and users could just drop a .pl file into the right folder and use it immediately. Some of our bots had similar interfaces for the instant messengers (AIM, MSN, YMSG, etc.) - so if somebody wanted to add something new and esoteric, like a CyanChat client, they could do so in a way that it was easily shareable with other botmasters.

For more nostalgic reading, a long time ago I wrote a blog post about SmarterChild and other AOL chatbots from around this time. I was meaning to follow up with an article about MSN Messenger but had never gotten around to it!

A discussion thread I got pulled into on Mastodon had me suddenly nostalgic for something we once had on the Internet, and which was really nice while it lasted: the Extensible Messaging and Presence Protocol, or XMPP, or sometimes referred to by its original name, Jabber.

What kicked off the discussion was somebody asking about a more modern "decentralized chat platform" known as Matrix (not the movie). A lot of people online talk about how they like Matrix, but I once had a go at self-hosting my own Matrix node (which I feel I should rant about briefly, below), and the discussion turned back towards something that we used to have which was (in my opinion) loads better: XMPP.

One of the many things I get really interested in is the nature of "reality" or at least the conscious parts of it (e.g. my perception of reality). The mind is such a curious and powerful thing -- like how none of us has ever actually 'seen' Objective Reality, because our brains are trapped in our dark skulls and all we really get are electrical signals sent by our eyes and other sensors. The brain then makes up all the stuff you see and call reality: a multimedia simulation based on what your brain thinks is going on out there in the world. We've all heard questions like "is my green, your red?" and how you'll never know for sure what somebody else sees in their reality.

In recent years I have been getting into meditation and really paying attention, really studying the way my mind works and I have found a few interesting things that I haven't seen talked about much online. I am currently suspicious that there is a connection between lucid dreaming, closed-eye visuals during wakeful consciousness, and the place in between the two: psychedelic visuals, which you can apparently just meditate your way into seeing, no entheogens required.

Let's start with the easy one. When I close my eyes and look into the darkness of my eyelids, I frequently will see some shapes and colors. From what I've heard from other people, this seems fairly common - you might see these too.

My regular closed-eye visuals tend to just be vague, formless blobs of color, maybe a blue blob here and a green one there. All kind of meandering around on their own, but very low resolution. Maybe they might vaguely form a face or some recognizable shape, but just loosely -- like seeing shapes in the clouds -- nothing very exciting.

But spoiler alert: they can get much more exciting.

One night, I woke up from dreaming at maybe 3:00 in the morning (who knows), to get up and use the bathroom as one does. When I got back in bed I closed my eyes and I decided to look for my closed eye visuals (often if I'm not deliberately trying to see them, they go unnoticed by me and I think I just "see" blackness with my eyes closed).

I was expecting to see the usual amorphous blobs, but what I instead saw blew me away: my closed eye visuals (henceforth called CEVs) were breathtakingly sharp, vivid, colorful and crisp. I was seeing a kaleidoscope of random images flashing before my eyes. It reminded me a lot of the Marvel movie opening logo. But every image I saw was just so crystal clear, with sharp lines and edges and vivid colors -- a far cry from the usual vague shapes my CEVs take on during the daytime.

I attribute this to the fact I had woken up from dreaming (REM sleep) and my brain was still close to that dream state. I have a suspicion that CEVs have a whole spectrum of forms they take: the vague blobbies are on one end of the spectrum, and full-color life-like hallucinations that we call "dreams" are at the opposite end.

And in between those two poles are other states that some people are familiar with.

A couple of years ago, quite by accident, I managed to meditate my way into seeing psychedelic visuals over top of reality. And you can probably learn to do this, too. I will save you the long story (I have written about it before, if you're curious) and cut straight to the chase -- here is how I've been able to consistently pull this off on command.

The trick is to just lock your eyes onto as fine a point as you can manage, and resist the urge to twitch your eyes even as your vision begins to play tricks on you. Many of you have probably experienced this much already: you know if you stare at a thing for too long, your vision plays tricks and things start disappearing or acting weird because of the sensory satiation, and darting your eyes will "reset" your vision to normal. But try and resist the urge to dart your eyes and just let your visuals do what they shall.

What happens next for me is: I will begin to see my closed eye visuals project themselves over top of reality, with my eyes open. The blobby, amorphous shapes I see with my eyes closed appear while they're open. But then my CEVs will begin to "play off of" or interact with my reality: where the border of one of the blobs touched a ridge on my ceiling that I was staring at, the ridge would wiggle or bounce back, as if my CEVs are interacting with it.

These visuals slowly ramp up and I'll begin seeing geometric patterns, fractals, lots of colors, and as these fractals interact with the scene I am staring at, they get everything to start wiggling and dancing. It can take me about 30 minutes of meditation before I am seeing full, 100% authentic visuals, like the height of the strongest acid or mushroom trip I'd ever been on, projected out onto whatever I was staring at during my meditation.

And when I've decided I had enough: I just look somewhere else and snap! everything is back in its place, in much the same way that darting your eyes during the earliest part of all of that "resets" your vision to normal.

Tell me if this sounds familiar: when I was a kid I would sometimes be woken up from a really good dream and wish I could have stayed in my dream a little bit longer to see how it ended. I could try and "make up" the ending myself, daydream how I think it might have gone, but it never felt right -- when you're in a dream, it's like your subconscious is telling you a story, and you can't quite predict what's going to come next. There's something magical about dreams, so that when I try and make up the ending myself, it doesn't come out right.

Well -- and I don't know exactly when this started, but it was in recent months at least -- I have somehow gained the ability to let my imagination wander freely, and I can actually let my dreams finish themselves out, autonomously, after I wake up. I'll be fully aware I'm awake (I can feel my body in bed and hear my surroundings) but still visually see and hear the dream at the same time.